Abstract

The popularity of deep learning has increased tremendously in recent years due to its ability to efficiently solve complex tasks in challenging areas such as computer vision and language processing. Despite this success, the low-level neural activity of Deep Neural Networks (DNNs) produces extremely rich representations of the data that are difficult to characterise and cannot be used directly to understand the decision process. In this paper we build upon our exploratory work, in which we introduced the concept of a co-activation graph and investigated the potential of graph analysis for explaining deep representations. The co-activation graph encodes statistical correlations between neurons’ activation values and therefore helps to characterise the relationships between pairs of neurons in the hidden layers and the output classes. To confirm the validity of our findings, we extend our experimental evaluation to datasets and models with different levels of complexity. For each dataset considered, we explore the co-activation graph, use graph analysis to detect similar classes and find central nodes, and use graph visualisation to better interpret the outcomes of the analysis. Our results show that graph analysis can reveal important insights into how DNNs work and enable partial explainability of deep learning models.

1. Introduction

Modern Deep Neural Networks (DNNs) can be trained efficiently on large amounts of data to perform hard tasks such as translating languages and identifying objects in an image [1,2]. DNNs have been shown to perform well on such complex tasks, where traditional machine learning methods may fail due to the high dimensionality of the data [3].

6. Conclusion

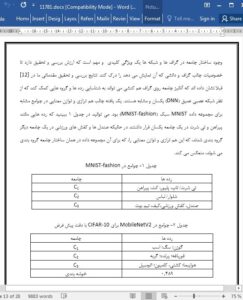

In this paper we formalise and experiment with a novel approach to analysing and explaining the inner workings of deep learning models. The proposed methodology relies on the notion of a co-activation graph, introduced in [12] and formalised in Section 3, to extract and represent knowledge from a trained Deep Neural Network (DNN). In the co-activation graph, nodes represent neurons in a DNN and weighted edges indicate a statistical correlation between their activation values. This representation connects neurons in any layer of the neural network, including hidden (convolutional and dense) layers, with the output layer.
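The structure described above can be illustrated with a minimal sketch. It is not the implementation used in this work: the correlation measure (Pearson here), the `threshold` parameter, the function names and the toy activation values are all assumptions made for illustration, and the formal definition is the one given in Section 3. The sketch takes one list of activation values per neuron (one value per input sample) and returns a weighted edge for each pair of neurons whose correlation is strong enough.

```python
import math
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def build_coactivation_graph(activations, threshold=0.0):
    """Build a weighted, undirected graph from per-neuron activation values.

    activations: dict mapping a neuron name to its activation values,
                 one value per input sample (hypothetical data layout).
    threshold:   assumed cut-off; only pairs with |correlation| above it
                 are kept as edges.
    Returns a dict {(neuron_u, neuron_v): correlation}.
    """
    edges = {}
    for u, v in combinations(sorted(activations), 2):
        weight = pearson(activations[u], activations[v])
        if abs(weight) > threshold:
            edges[(u, v)] = weight
    return edges


# Toy activations for two hidden neurons and one output neuron over four inputs.
activations = {
    "hidden_0": [0.1, 0.9, 0.2, 0.8],
    "hidden_1": [0.9, 0.1, 0.8, 0.2],
    "out_cat": [0.0, 1.0, 0.0, 1.0],
}
graph = build_coactivation_graph(activations, threshold=0.5)
for (u, v), weight in graph.items():
    print(f"{u} -- {v}: {weight:+.3f}")
```

In this toy example `hidden_0` is strongly positively correlated with the output neuron `out_cat`, while `hidden_1` is negatively correlated with both. Because hidden and output neurons are nodes of the same graph, an edge can link a neuron in any layer directly to an output class.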