Abstract

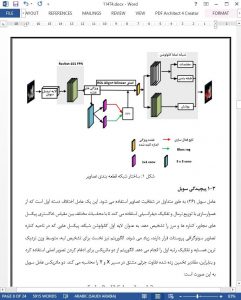

Computer-aided diagnosis of prostate ultrasound images can aid in the detection and treatment of prostate cancer. However, prostate ultrasound images can suffer from severe speckle noise and a low signal-to-noise ratio, which leads to poor detection accuracy. To overcome these shortcomings, this paper proposes a deep learning model that integrates S-Mask R-CNN and Inception-v3 for ultrasound image-aided diagnosis of prostate cancer. The improved S-Mask R-CNN was used to segment prostate ultrasound images accurately and to generate candidate regions. The region of interest align (ROIAlign) algorithm was used to position feature points at the pixel level. A convolutional network generated the corresponding binary mask of each prostate image to separate the prostate region from the background. The background information was then shielded, and a data set of segmented prostate ultrasound images was constructed for lesion detection with the Inception-v3 network. A new network model replaced the original classification module and is trained by forward and backward propagation. In the forward pass, features extracted by the convolution and pooling layers up to the pool_3 layer are transferred to the input layer of the new network through a transfer learning strategy, and the loss between the predicted and true labels is then calculated to identify prostate lesions in the ultrasound images. The experimental results showed that the proposed method accurately detects lesions in prostate ultrasound images while simultaneously segmenting the prostate at the pixel level. The proposed method achieved higher accuracy than doctors' manual diagnosis and the other detection methods compared. We hope this simple and effective approach will serve as a solid baseline for future research on computer-aided diagnosis of prostate ultrasound images and will promote the development of ultrasound diagnostic technology for prostate cancer.
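To make the background-shielding and classification steps concrete, here is a minimal pure-Python sketch under stated assumptions: the binary mask from the segmentation stage is applied to the image so that background pixels become zero, and the replacement classification module is modelled as a single dense layer with softmax whose loss is the cross-entropy against the true label. The function names (`shield_background`, `head_forward`, `cross_entropy`), the single-layer head, and the short feature vector standing in for the transferred pool_3 features are hypothetical; the paper does not specify the head's exact architecture or class set.

```python
import math
from typing import List, Sequence

Image = List[List[float]]
Mask = List[List[int]]


def shield_background(image: Image, mask: Mask) -> Image:
    """Keep pixels where the binary mask is 1 (prostate); zero the background."""
    if len(image) != len(mask) or any(len(r) != len(m) for r, m in zip(image, mask)):
        raise ValueError("image and mask must have the same shape")
    return [[px if m else 0.0 for px, m in zip(row, mrow)]
            for row, mrow in zip(image, mask)]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the largest logit before exp()."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def head_forward(features: Sequence[float],
                 weights: Sequence[Sequence[float]],
                 bias: Sequence[float]) -> List[float]:
    """Hypothetical replacement head: one dense layer followed by softmax.

    `features` stands in for the transferred pool_3 feature vector;
    `weights` holds one row of weights per class.
    """
    logits = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Loss between the predicted class probabilities and the true label."""
    return -math.log(max(probs[label], 1e-12))


if __name__ == "__main__":
    image = [[0.2, 0.8], [0.5, 0.9]]
    mask = [[0, 1], [1, 1]]
    print(shield_background(image, mask))  # [[0.0, 0.8], [0.5, 0.9]]
    probs = head_forward([1.0, 2.0], [[0.1, 0.3], [0.4, -0.2]], [0.0, 0.0])
    print(probs, cross_entropy(probs, label=1))
```

In a typical transfer-learning setup the backward pass would update only the head's weights while the transferred layers stay frozen; whether the authors froze those layers is not stated here.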

7. Conclusion

This paper proposed a deep learning model that integrates S-Mask R-CNN and Inception-v3 for ultrasound image-aided diagnosis of prostate cancer. A data set of prostate ultrasound images annotated for segmentation and classification was constructed, and the proposed model was trained on it. The model uses the ROIAlign algorithm to position feature points accurately in prostate ultrasound images. Combining ResNet-101 with a region proposal network (RPN) improved segmentation accuracy, and a fully convolutional network (FCN) generated the corresponding binary mask of each prostate ultrasound image to separate the prostate from the background. The softmax classification layer of the original model was replaced with an improved Inception-v3 classification network. Comparison tests against classical network models showed that the proposed method improves detection without significantly increasing computational or model complexity. Future work will expand the current data set and replace bilinear interpolation with bicubic interpolation to improve segmentation accuracy and, in turn, detection performance. Building on this work, the model will be further optimized and extended to 3D.
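The conclusion credits ROIAlign with pixel-level feature positioning and proposes swapping bilinear for cubic interpolation. The following is a minimal pure-Python sketch of that idea, not the authors' implementation: `roi_align` keeps the RoI box in floating-point coordinates (no quantization), averages several interpolated sample points per output bin, and accepts the interpolation function as a parameter so that `bilinear` and `bicubic` can be exchanged. The function names, the sampling ratio of 2, border clamping, the absence of a half-pixel offset, and the Keys cubic kernel with a = -0.5 are all assumptions; the paper does not state which cubic kernel it would use.

```python
import math
from typing import Callable, List, Tuple

Grid = List[List[float]]


def _clamp(v: int, lo: int, hi: int) -> int:
    """Clamp an index so out-of-range reads repeat the border value."""
    return max(lo, min(hi, v))


def bilinear(fmap: Grid, y: float, x: float) -> float:
    """Bilinear interpolation at real-valued (y, x) from the 2x2 neighbours."""
    h, w = len(fmap), len(fmap[0])
    y0, x0 = math.floor(y), math.floor(x)
    dy, dx = y - y0, x - x0

    def at(r: int, c: int) -> float:
        return fmap[_clamp(r, 0, h - 1)][_clamp(c, 0, w - 1)]

    top = at(y0, x0) * (1 - dx) + at(y0, x0 + 1) * dx
    bottom = at(y0 + 1, x0) * (1 - dx) + at(y0 + 1, x0 + 1) * dx
    return top * (1 - dy) + bottom * dy


def cubic_kernel(t: float, a: float = -0.5) -> float:
    """Keys cubic convolution kernel (a = -0.5 is a common choice)."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0


def bicubic(fmap: Grid, y: float, x: float) -> float:
    """Bicubic interpolation over the 4x4 neighbourhood of (y, x)."""
    h, w = len(fmap), len(fmap[0])
    y0, x0 = math.floor(y), math.floor(x)
    total = 0.0
    for m in range(-1, 3):
        for n in range(-1, 3):
            r, c = _clamp(y0 + m, 0, h - 1), _clamp(x0 + n, 0, w - 1)
            total += fmap[r][c] * cubic_kernel(y - (y0 + m)) * cubic_kernel(x - (x0 + n))
    return total


def roi_align(fmap: Grid, box: Tuple[float, float, float, float], out_size: int,
              samples: int = 2,
              interp: Callable[[Grid, float, float], float] = bilinear) -> Grid:
    """Pool one RoI (y1, x1, y2, x2) into an out_size x out_size grid.

    Each bin averages samples x samples interpolated points; the box is never
    rounded to integer coordinates.
    """
    y1, x1, y2, x2 = box
    bin_h, bin_w = (y2 - y1) / out_size, (x2 - x1) / out_size
    out = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            acc = 0.0
            for sy in range(samples):
                for sx in range(samples):
                    y = y1 + (i + (sy + 0.5) / samples) * bin_h
                    x = x1 + (j + (sx + 0.5) / samples) * bin_w
                    acc += interp(fmap, y, x)
            row.append(acc / samples**2)
        out.append(row)
    return out


if __name__ == "__main__":
    fmap = [[float(10 * r + c) for c in range(6)] for r in range(6)]
    print(roi_align(fmap, (1.0, 1.0, 3.0, 3.0), out_size=1))
    print(roi_align(fmap, (1.0, 1.0, 3.0, 3.0), out_size=1, interp=bicubic))
```

Both interpolators reproduce a linear ramp exactly away from the borders, so the two calls above agree; they differ on curved intensity profiles, where the wider 4x4 support of the cubic kernel is what the proposed swap would rely on.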