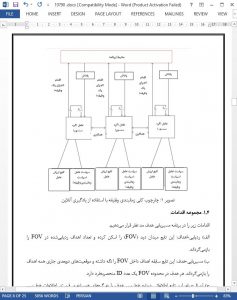

Wireless sensor networks (WSNs) are an attractive platform for monitoring and measuring physical phenomena. WSNs typically consist of hundreds or thousands of tiny, battery-operated sensor nodes that are connected via a low-data-rate wireless network. A WSN application, such as object tracking or environmental monitoring, is composed of individual tasks that must be scheduled on each node. Naturally, the order of task execution influences the performance of the WSN application. Scheduling the tasks so that performance increases while energy consumption remains low is a key challenge. In this paper, we apply online learning to task scheduling in order to explore the tradeoff between performance and energy consumption. This helps to dynamically identify effective scheduling policies for the sensor nodes. The energy consumption for computation and communication is represented by a parameter for each application task. We compare resource-aware task scheduling based on three online learning methods: independent reinforcement learning (RL), cooperative reinforcement learning (CRL), and exponential weight for exploration and exploitation (Exp3). Our evaluation is based on the performance and energy consumption of a prototypical target tracking application. We further determine the communication overhead and computational effort of these methods.

1. Introduction

A wireless sensor network (WSN) is an attractive platform for various applications including target tracking, environmental monitoring, data aggregation, and smart environments. Such an application is composed of tasks that must be executed on the sensor nodes during operation. The sensor nodes are typically powered by batteries and are therefore strongly limited not only in energy but also in computation, storage, and communication capabilities [1–4].

7. Conclusion

In this paper, we applied online learning algorithms to resource-aware task scheduling in WSNs. We analyzed and compared the performance of online task scheduling methods based on three learning algorithms: RL, CRL, and Exp3. Our evaluation results show that these methods differ in achieved performance and resource-awareness. The selection of a particular algorithm depends on the application requirements and the available resources of the sensor nodes.

Future work includes the application of our resource-aware scheduling approach to different WSN applications, the implementation on our visual sensor network platforms [23], and the comparison of our approach with other variants of reinforcement learning methods.